I gave a talk at AppSecEU 2013 on August 23rd about Minion, and talked about some of the things we are doing to make the security testing more available to the Mozilla community.

Update: You can watch the recording below:

Once we get where we want to with Minion, we will talk more about what we plan to do with it within Mozilla, both through a public webcast, and on the Mozilla Security blog, but right now we just aren’t ready for it. Instead, I will explain Minion, and lay out my personal goals for the project.

At Mozilla I work with a team of really bright people who are working hard on some of the challenges of building secure software and managing the IT security risks associated with a high-profile open source project. One of the biggest challenges we face is that Mozilla is a public benefit organization. We don’t tend to drive the same scale of insane profits that some of our competitors do (and profits aren’t a bad thing!), which means that we have to find new and interesting ways to scale. I spoke about some of these things at AppSecUSA at the end of October, and the video should be available online soon.

Adding to this challenge is the state of the Application Security workforce. For skilled AppSec people, the unemployment rate is essentially 0%. For under-skilled[1] AppSec people, it is not much higher. This makes it hard to recruit skilled, knowledgeable people to help with some of our activities. We have had a huge amount of success over the last two years in growing and building an effective team, but like every other security team, we are increasingly competing for a small number of high value individuals. (Hey, interested in applying for jobs? Look here!)

To complicate matters even further, the amount of competition in the security space and the history of the security community telling everyone that security sucks, security is hard, and they aren’t smart enough to get security right have both contributed to the proliferation of Security As a Service vendors. Unless you have a huge amount of financial resources, compelling and interesting problems to work on, or some other incentive, it is very difficult to attract security talent when vendors are offering top dollar for people to work in their bug mills (again, not a bad thing; good quality teams are expensive, and vendors can help offer access to good talent).

For the last year I have been preaching automation to our team, and last spring we started growing our Security Automation Engineering team. Following that, we recently started to build out a new team to focus on developer oriented tools. We wanted a “security tool automation system”. We envision a tool that will provide basic automation for a range of security tools, with intelligently selected defaults for ease of use, and well thought out configuration options that let the user configure a range of tools by modifying a small set of values. This tool is called Minion.

Minion is intended to be disruptive.

First, it will help us break out of the pattern of running and using security tools within our team; we want all of the developers in our organization to use them. We want our developers to do horrible things to the applications and services they write, and we want it to be as easy as the push of a button. If my team is asked to review an application[2] for release to production, having passed through Minion should be one of the first steps.

Second, we want Minion to become a Security As A Service platform. Automation is not the answer to everything; in the long term, Minion should provide a solid toolkit that any security team can organize around. Once we finish the core feature set of Minion, we will start to focus on the team oriented aspects of the platform. It should be easy to extend, easy to adopt, and support hooking into each step of the SDLC. In short, we want to create a platform that allows any good security team to compete with the established Security As A Service players in terms of service delivery, documentation, and workflow, without constraining people to a specific philosophy or process.

Finally, we want Minion to be a framework. As an information gathering tool, it is effectively useless if that information feeds into a write-only repository. To that end we are working to define clear, extensible formats that can be used to integrate with and consume data from Minion. Want to create a fancy dashboard for C-Level executives? Minion should allow that. Want to write a module to automate generating a report worthy of a typical audit firm? Minion should allow you to do that! Want to perform data mining to find out which vulnerabilities are most common in the applications you test? Minion should allow that! By building Minion around REST APIs and modern web standards, it is possible to build any kind of mashup you can think of with the data that Minion collects and the results it tracks.
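To make the data mining idea a little more concrete, here is a minimal sketch of counting the most common findings across a set of scan results. It assumes the results have already been exported as JSON; the field names (`target`, `issues`, `summary`, `severity`) and the sample data are invented for illustration and are not Minion's actual format.

```python
import json
from collections import Counter

# Hypothetical export: the field names ("target", "issues", "summary",
# "severity") are assumptions for illustration, not Minion's real schema.
SAMPLE_EXPORT = """
[
  {"target": "https://app-one.example", "issues": [
    {"summary": "Missing X-Frame-Options header", "severity": "Low"},
    {"summary": "Cross-site scripting", "severity": "High"}
  ]},
  {"target": "https://app-two.example", "issues": [
    {"summary": "Cross-site scripting", "severity": "High"},
    {"summary": "Cookie without HttpOnly flag", "severity": "Medium"}
  ]},
  {"target": "https://app-three.example", "issues": [
    {"summary": "Cross-site scripting", "severity": "High"},
    {"summary": "Missing X-Frame-Options header", "severity": "Low"}
  ]}
]
"""


def most_common_issues(export_text, top_n=3):
    """Return the top_n (summary, count) pairs across all scans."""
    scans = json.loads(export_text)
    counts = Counter(
        issue["summary"]
        for scan in scans
        for issue in scan.get("issues", [])
    )
    return counts.most_common(top_n)


if __name__ == "__main__":
    for summary, count in most_common_issues(SAMPLE_EXPORT):
        print(f"{count:3d}  {summary}")
```

The same handful of lines could just as easily feed a dashboard or a report generator; the point is only that structured, readable results make this kind of reuse cheap.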

I pushed for Minion to be created, but it would still be a poorly written set of PoC scripts if it wasn’t for the hard work of Matthew Fuller, Simon Bennetts, and Stefan Arentz. These guys have done an amazing job, and I look forward to seeing how they continue to push the project forward! If you are interested in Minion, you can check out the repo, or join the mailing list using the links below!

Thanks for reading!

Yvan

[1] “Underskilled”: Securing web applications is hard. Securing applications is hard. Securing applications that people use to build web applications and access web applications is really hard. Not everyone is cut out for the job, and there is nothing wrong with that; there are a lot of opportunities for people that don’t demand staying on the bleeding edge of technology.

[2] Note that this is different from the consultative security work we do over the course of a project; Minion is not a substitute for a security program, it simply offloads some of the testing burden to automated tools and lays a foundation that the security team can look at when starting a final review before a product or service is released.

This post is cross-posted from the Mozilla Security blog.

At Mozilla we have a strong commitment to security; unfortunately, due to the volume of work underway at Mozilla, we sometimes have a bit of a backlog in getting security reviews done.

Want to speed up your security review request? You can dramatically reduce the turnaround time for your security review request by providing the information below. In addition to this, we are working to expand our overall security review process documentation; you can follow those efforts here.

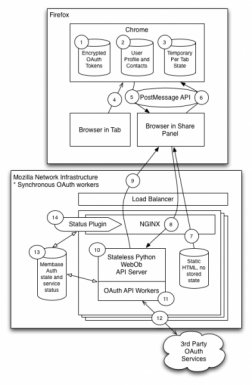

An architecture diagram illustrates how the various components of the service communicate with one another. This information allows the individual doing the security review to understand which services are required, how and where data is stored, and provides a general understanding of how the application or service works. Producing an architecture diagram is a good practice as it allows anyone to get a rapid view of how complex a system is, and can inform how much time it will take to work through a review of the system.

Note that these are just examples; the architecture diagram is intended to help the reviewer visualize what they are assessing. It doesn’t have to be a fancy diagram, and our team has worked from camera shots of whiteboards from meetings!

A Detailed Application Diagram is essentially a data flow diagram (DFD): it enumerates each application or service that is a component of a system, and lists the paths that data can flow through. A data flow diagram helps the security reviewer to understand how data moves through the system, how different operations are performed, and, if detailed enough, how different roles within the system access different operations.

While there are a number of different opinions on the “best way” to do a DFD, it is more helpful to have the information than it is to focus on presenting the information “the right way”. Examples

An enumeration of data flows in the application explains how and what data moves between various components. Note that this doesn’t need to be a rigorous explanation of fields; in this case we want a general description of the message, the origin of the message (browser, third party, service, database, etc), the general contents (e.g. “description of the add-on”, “content to be shared”, etc), and a list of sensitive fields. The BrowserID Dataflow Enumeration is an excellent example.
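As a rough illustration of the level of detail we mean, a data flow enumeration can be as simple as a list of records. The sketch below is hypothetical: the `DataFlow` type, the `destination` field, and the example flows are assumptions made up for this post, not a required format.

```python
from dataclasses import dataclass, field


@dataclass
class DataFlow:
    """One message moving between two components of the system."""
    description: str   # general description of the message
    origin: str        # browser, third party, service, database, ...
    destination: str   # assumed field: where the message ends up
    contents: str      # general contents, not a rigorous field list
    sensitive_fields: list = field(default_factory=list)


# Hypothetical flows for an add-on sharing service.
FLOWS = [
    DataFlow("Submit add-on listing", "browser", "web service",
             "description of the add-on", ["session cookie"]),
    DataFlow("Store listing", "web service", "database",
             "description of the add-on"),
    DataFlow("Share content", "web service", "third party",
             "content to be shared", ["user email", "API token"]),
]


def flows_with_sensitive_data(flows):
    """Return the flows a reviewer will probably want to look at first."""
    return [flow for flow in flows if flow.sensitive_fields]


if __name__ == "__main__":
    for flow in flows_with_sensitive_data(FLOWS):
        print(f"{flow.origin} -> {flow.destination}: "
              f"{flow.description} ({', '.join(flow.sensitive_fields)})")
```

A plain table or wiki page with the same columns works just as well; what matters is that each flow names its origin, its general contents, and any sensitive fields.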

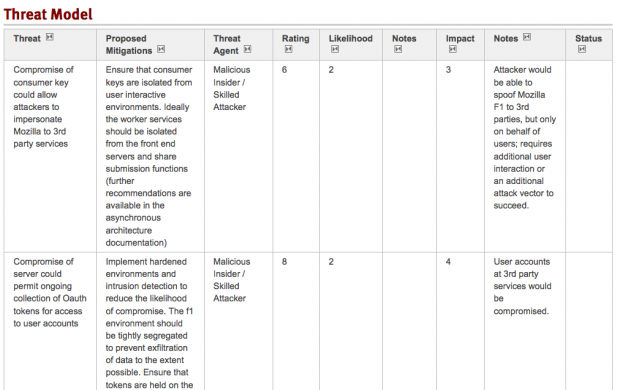

The next step is reviewing all of this information to build out a list of the threats to an application. The important bit here is that you, as a developer or contributor, know how an application or system works. You know many of the application’s failure modes, and you understand its ‘business logic’. Many developers have a working knowledge of vulnerabilities, and can identify these types of issues. In order to properly perform a threat analysis, a reviewer needs to understand how the various components of the system work, know what threats exist, and be able to identify what mitigating controls have been put into place. Here is an example of what a threat analysis might look like (links below):

The threat analysis should contain, at a minimum, the following information:

Additional information on how we assess and rate threats will be published as part of the documentation for our risk rating and security review process. Examples:

Help us help you!

Part of determining the scope of a security review is understanding how an application works and what the risks are; the documentation described in this post helps us to understand this and will ensure that we can complete a security review as quickly as possible. Beyond that, as teams understand how security reviews are performed it gives them the opportunity to take ownership of security and build it more effectively into their own processes.

As with other Mozilla teams we are actively pursuing better community engagement and always welcome feedback.

This post is cross-posted from the Mozilla Web Application Security blog.

One of the important projects that Mozilla has been building in 2011 is BrowserID, a user-centric identity protocol and authentication service. Significant work has gone into building out and testing the infrastructure and protocol to make sure that it is a robust, open, and simple to adopt authentication scheme. If you want to learn more about BrowserID, here is a quick 12 minute video that explains what it is, and why we are doing this.

BrowserID continues to evolve as we build support for it across Mozilla properties and encourage adoption from 3rd parties. To date there are almost 1,000 different websites that rely on BrowserID, but we still have a long way to go to see large scale adoption!

Much of the effort we have put into reviewing the protocol and implementation of BrowserID is discussed in a recent presentation, a recording of which can be found below:

[Slides: html | html(zip) | pdf] (if the video doesn’t appear, click here!)

In addition to ongoing application and infrastructure security work in the next year, we are aiming for two significant milestones in 2012. First, we will commission two third party security reviews: one of the BrowserID.org site, and one of the cryptography used in BrowserID (including the protocol, the algorithms used, and the libraries we are relying on). Our objective in doing the third party reviews is to remain as transparent as possible in the development and review of the security aspects of BrowserID. This commitment to transparency includes:

As we proceed with this effort we will publish additional information on this blog, and we will work to keep the community up to date at each stage of progress.

Second, once we have completed the 3rd party review, resolved the issues identified, and published the results, BrowserID.org will become one of the properties fully covered by the Bug Bounty program (as always, exceptional bugs reported for non-covered sites will be considered for bounty nomination).

This post is cross-posted from the Mozilla Web Application Security blog.

The AppSec space is an extremely challenging field to work in, largely due to asymmetry: when you play defence you have to stay on top of each emerging threat, vulnerability, and development that falls into your scope, while an attacker only needs to find one weakness. When there is a fixed number of resources to spend on protecting a set of assets, choices have to be made about how best to spend those resources to prevent the attackers from winning. The best way to do that is by applying risk analysis techniques and focusing on the highest risk assets. Once those assets are identified, a decision has to be made about how to invest time and effort in design vs. implementation, static vs. dynamic analysis, and automated vs. manual testing. Even with the goal of continuous engagement within the SDLC, decisions are made based on risk, and the limited pool of resources must be split up to build a solid defence.
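As a toy illustration of splitting a fixed budget by risk, the sketch below scores each asset as likelihood times impact and divides the available review hours in proportion to that score. The scoring model, the 1 to 5 scales, and the asset names are all assumptions chosen for the example; this is not a description of how we actually rate risk.

```python
def allocate_review_hours(assets, total_hours):
    """Split total_hours across assets in proportion to their risk score.

    assets is a list of (name, likelihood, impact) tuples, where likelihood
    and impact are rough 1-5 ratings (an assumed scale for this sketch).
    Returns (name, score, hours) tuples, highest risk first.
    """
    scored = [(name, likelihood * impact) for name, likelihood, impact in assets]
    total_score = sum(score for _, score in scored)
    if total_score == 0:
        return [(name, 0, 0.0) for name, _ in scored]
    plan = [
        (name, score, total_hours * score / total_score)
        for name, score in scored
    ]
    return sorted(plan, key=lambda row: row[1], reverse=True)


if __name__ == "__main__":
    # Hypothetical assets with made-up ratings.
    assets = [
        ("marketing page", 2, 2),
        ("authentication service", 4, 5),
        ("add-on site", 3, 4),
    ]
    for name, score, hours in allocate_review_hours(assets, 90):
        print(f"{name:24s} score={score:2d} hours={hours:.1f}")
```

A real risk analysis weighs far more than two numbers, but even this crude split makes the trade-off visible: every hour spent on a low risk asset is an hour taken from a high risk one.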

The biggest challenge is that we have a rapidly growing development community; while the security team is growing to meet our needs, we need to find better ways to scale testing and analysis to get the same results with better efficiency. Out of the gate, I am going to deal with one important issue by casually tossing it off to the side. Tooling is a really important part of the discussion, but the bottom line is that tools won’t make a difference in your organization if you don’t have the right people to use them. Good tools might help unskilled workers get good results, but skilled workers with suboptimal tools will still get great results. The adage “It’s a poor craftsman who blames his tools” sums it up neatly.

In order to scale up a team with limited resources (time, people, money), there are a number of things that can be done.

Each of these types of activities is already in place at Mozilla, but there is still more we can do. We perform a great deal of manual testing because, once we have reached that point in the development life cycle, it is the best way to find implementation or design issues that slipped through the cracks. One area we are investigating is how to make our manual testing and analysis repeatable and reusable.

Some things we plan to do to move in this direction include:

As we get these activities up and running, we will keep the community updated on how we are progressing.